Getting Started

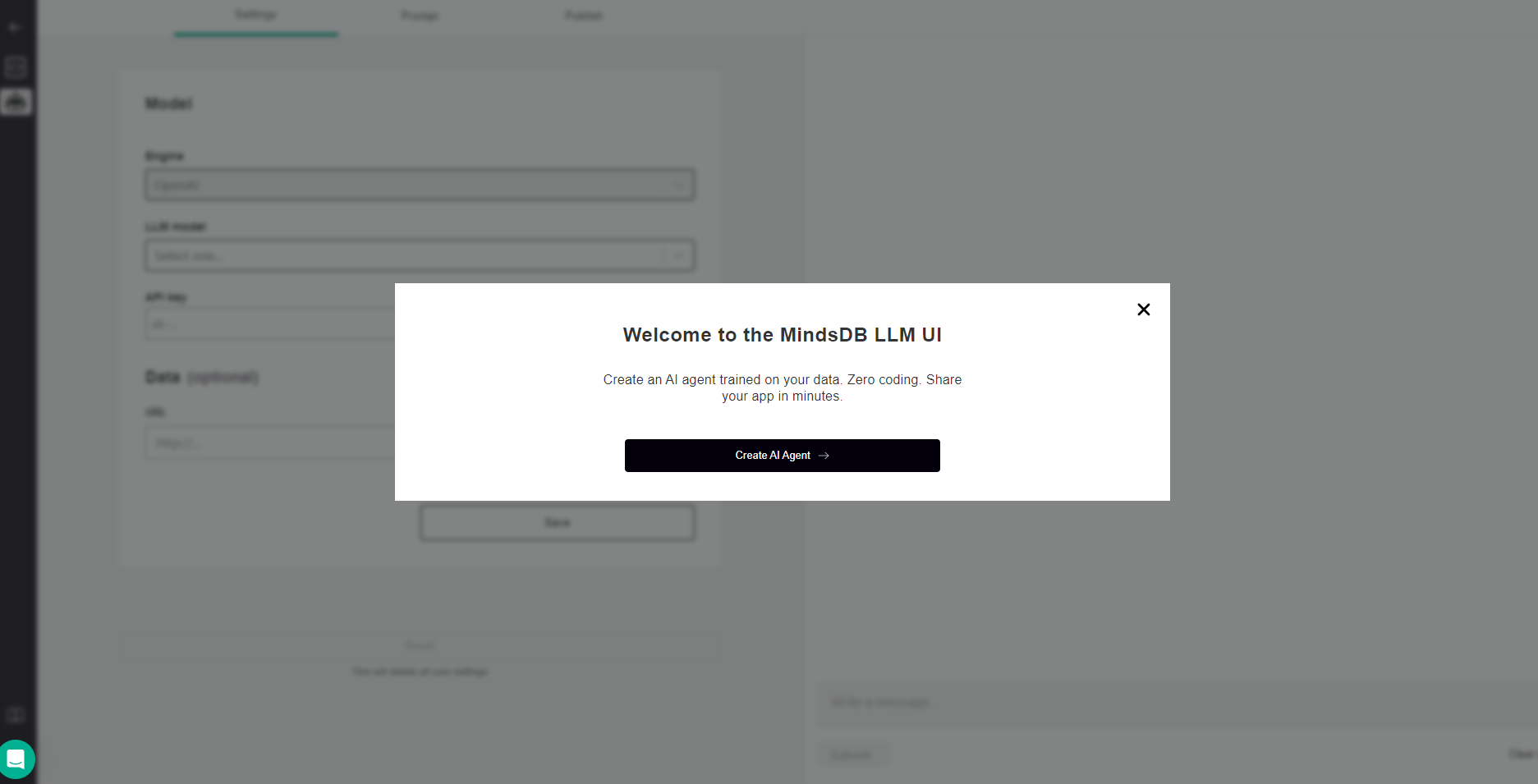

To access the LLM UI, click the robot icon in the left menu. You’ll see a welcome screen.

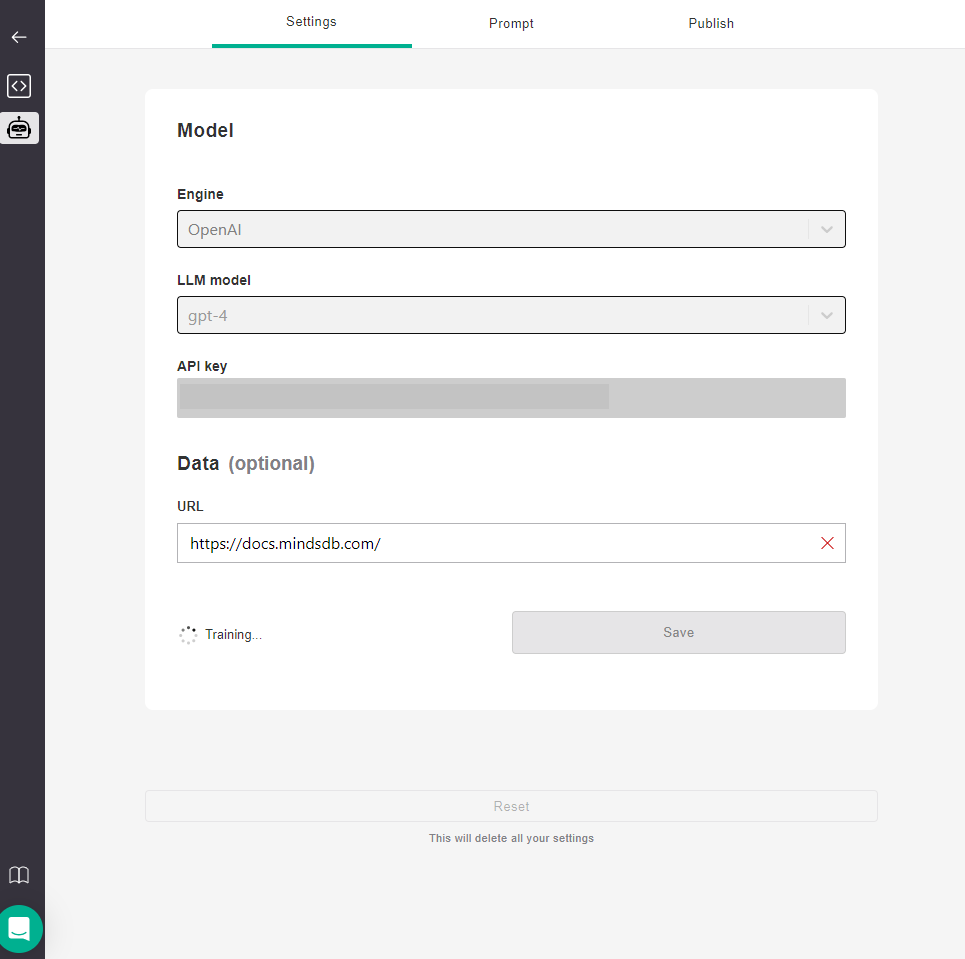

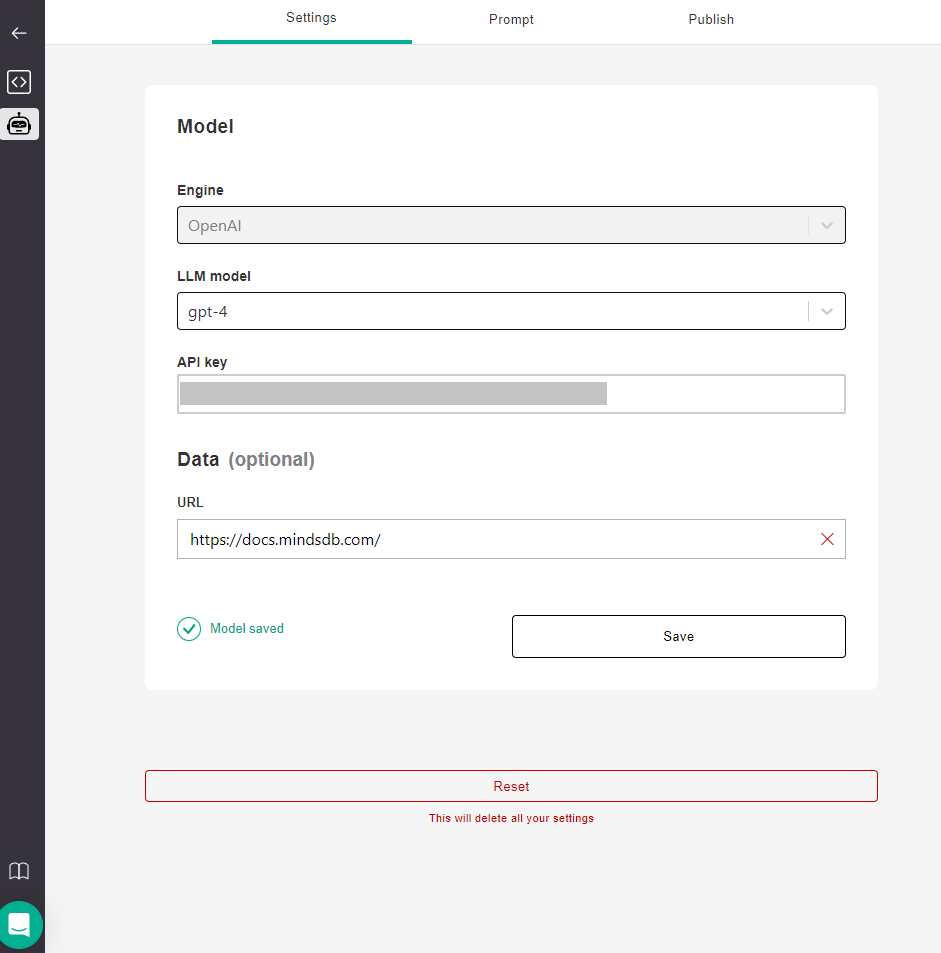

- In the Settings tab, under Model, you can choose an engine and a model and provide an API key. Under Data, you can provide a URL to be used for training the chatbot.

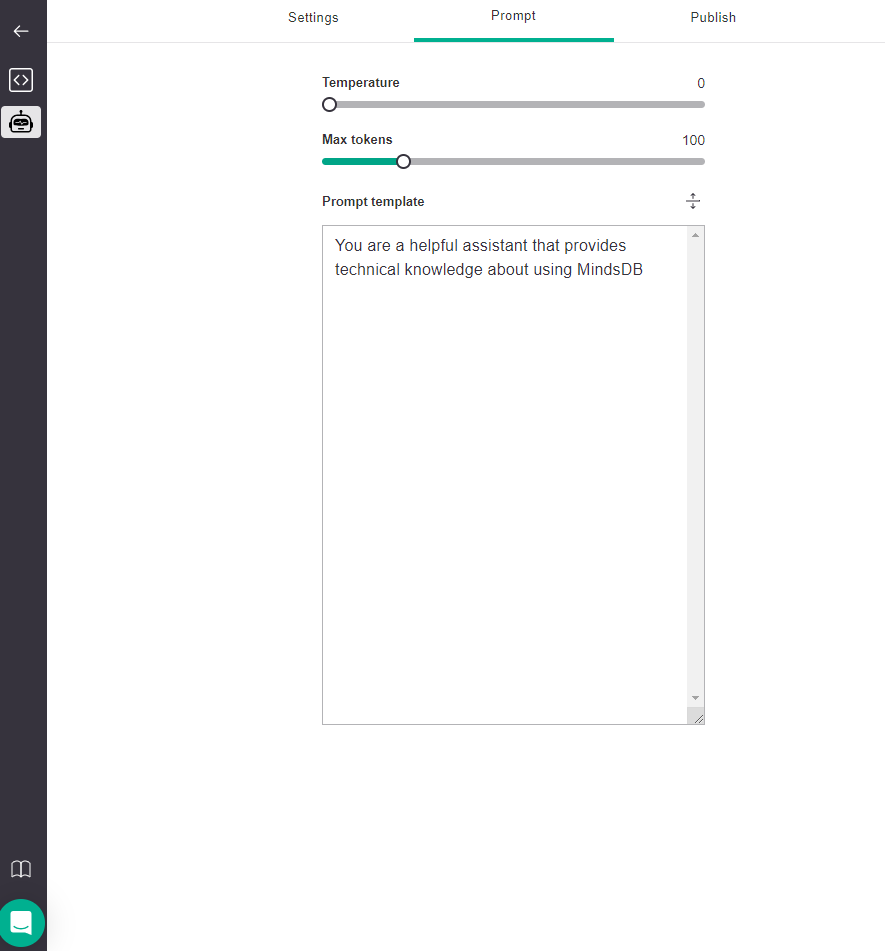

- In the Prompt tab, you can set the Temperature and Max tokens parameters and provide a Prompt template message to the model. Once you have completed these steps, you can chat with your bot.

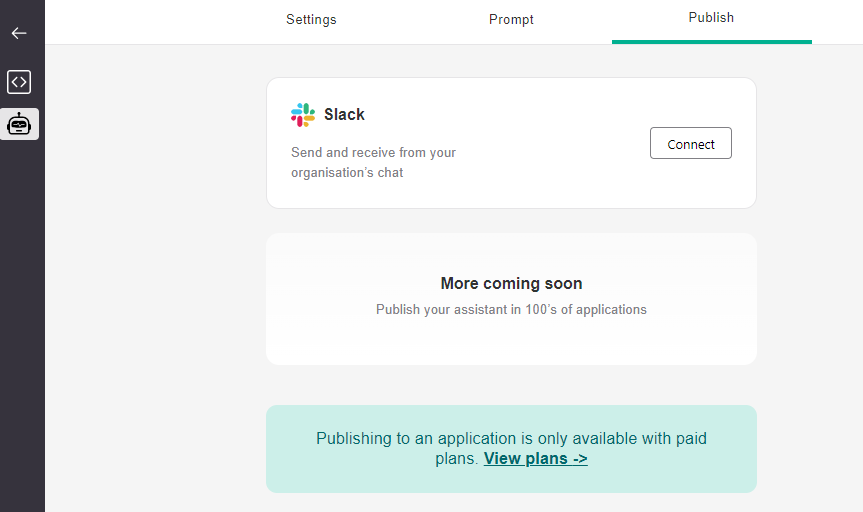

- In the Publish tab, you can publish your chatbot to a chat application like Slack.

Settings

In the Settings tab, you can provide information about the engine and model that you want to use. Currently, we offer OpenAI models, which require you to provide an API key.

Once the settings are saved, a "Model saved" message is shown.

Prompt

In the Prompt tab, you can set up a prompt message to give general directions to the chatbot. You can also define the temperature and max_tokens values that affect responses.

Low temperature values mean that the model takes fewer risks, so completions are more accurate and deterministic. High temperature values, on the other hand, result in more diverse completions. The max_tokens value should be as close to the expected response size as possible.
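To make the effect of temperature more concrete, here is a minimal sketch of temperature-scaled sampling probabilities. It is only an illustration of the general idea, not the model's actual implementation: the function name `softmax_with_temperature` and the example scores are assumptions made for this sketch.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw candidate scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        # Assumption for this sketch: only positive temperatures are handled.
        raise ValueError("temperature must be positive in this sketch")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(score - peak) for score in scaled]
    total = sum(exps)
    return [value / total for value in exps]


# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.5]

low = softmax_with_temperature(logits, 0.2)   # sharper distribution
high = softmax_with_temperature(logits, 1.5)  # flatter distribution

print("temperature 0.2:", [round(p, 3) for p in low])
print("temperature 1.5:", [round(p, 3) for p in high])
```

With the low temperature, almost all of the probability goes to the top-scoring candidate, which is why completions become more deterministic. With the high temperature, the probabilities are spread more evenly, which leads to more varied completions.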

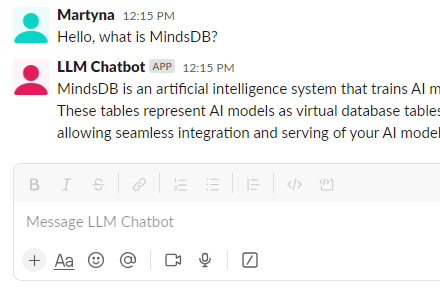

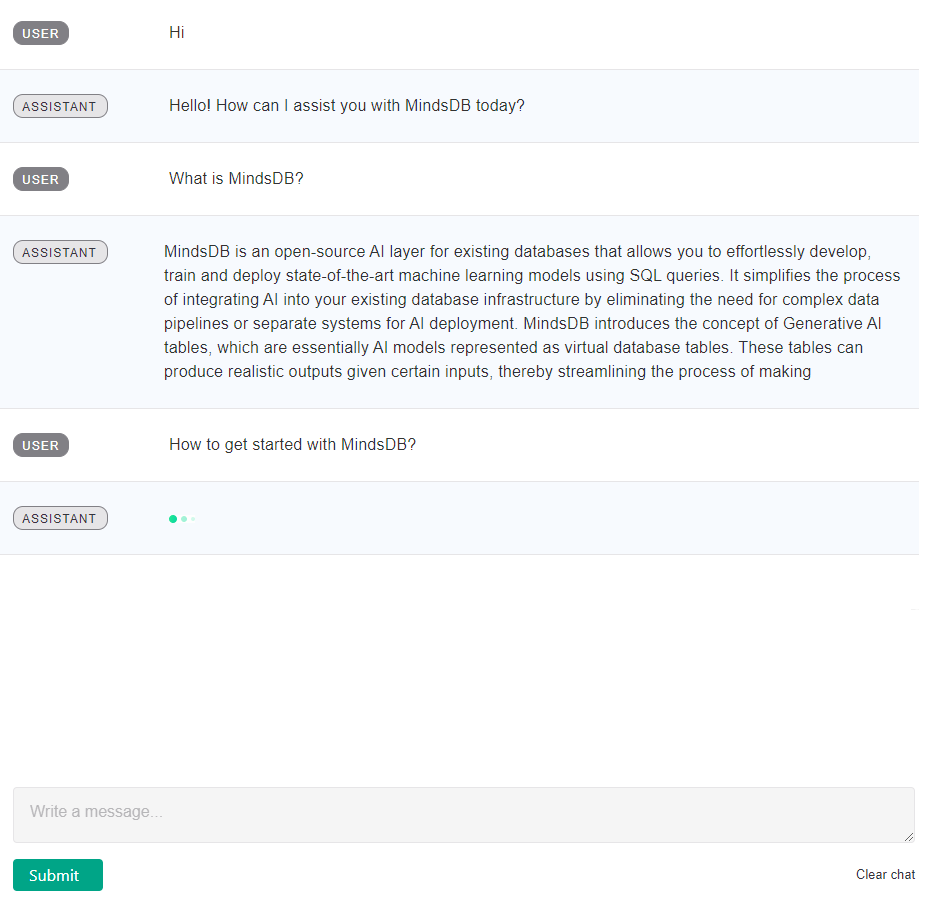

Now we are ready to talk with the chatbot.

The right half of the screen is a chat interface where you can submit messages and receive replies.

Publish

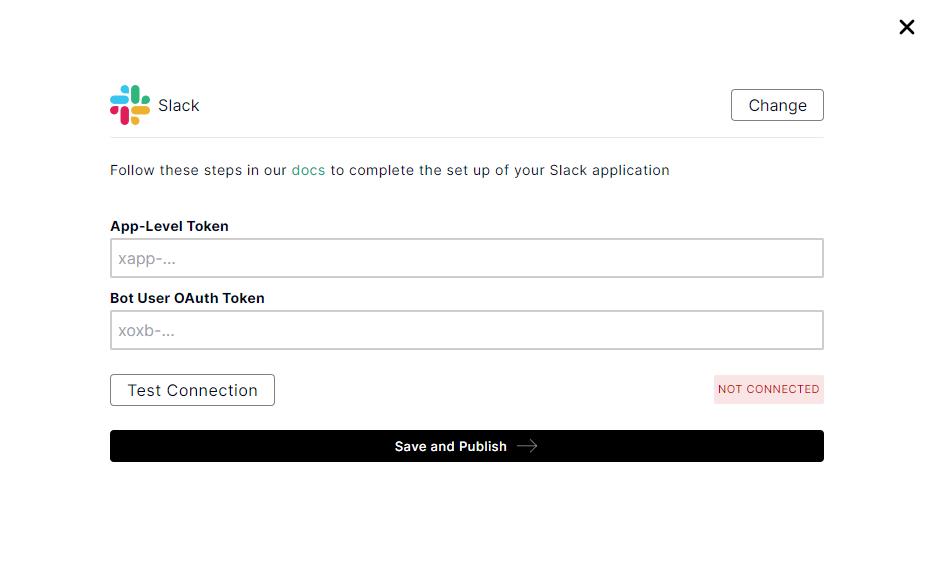

You can integrate this chatbot into chat applications. Currently, we support Slack.

To publish the chatbot to Slack, you need two tokens: a Bot User OAuth Token and an App-Level Token.

Slack App Setup

Follow the instructions below to set up the Slack app and generate the required tokens.

- Follow this link and sign in with your Slack account.

- Create a new app From scratch or select an existing app.

  - Please note that the following instructions support apps created From scratch.

  - For apps created From an app manifest, please follow the Slack docs here.

- Go to Basic Information under Settings.

  - Under App-Level Tokens, click on Generate Token and Scopes.

  - Name the token socket and add the connections:write scope.

  - Copy and save the xapp-... token; you’ll need it to publish the chatbot.

- Go to Socket Mode under Settings and toggle the button to Enable Socket Mode.

- Go to OAuth & Permissions under Features.

  - Add the following Bot Token Scopes:

    - channels:history

    - channels:read

    - chat:write

    - groups:read

    - im:history

    - im:read

    - im:write

    - mpim:read

    - users.profile:read

  - In the OAuth Tokens for Your Workspace section, click on Install to Workspace and then Allow.

  - Copy and save the xoxb-... token; you’ll need it to publish the chatbot.

- Go to App Home under Features and click on the checkbox to Allow users to send Slash commands and messages from the messages tab.

- Go to Event Subscriptions under Features.

  - Toggle the button to Enable Events.

  - Under Subscribe to bot events, click on Add Bot User Event and add message.im.

  - Click on Save Changes.
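Before pasting the tokens into the Publish tab, it can help to sanity-check them against the steps above. The following is a minimal sketch, not part of the product: the helper names `token_problems` and `missing_scopes` are hypothetical, and the only facts it relies on are the token prefixes (xoxb-, xapp-) and the Bot Token Scopes listed in this guide.

```python
# Bot Token Scopes listed in the setup steps of this guide.
REQUIRED_BOT_SCOPES = {
    "channels:history",
    "channels:read",
    "chat:write",
    "groups:read",
    "im:history",
    "im:read",
    "im:write",
    "mpim:read",
    "users.profile:read",
}


def token_problems(bot_token, app_token):
    """Return a list of problems found in the two tokens (empty if none)."""
    problems = []
    if not bot_token.startswith("xoxb-"):
        problems.append("Bot User OAuth Token should start with xoxb-")
    if not app_token.startswith("xapp-"):
        problems.append("App-Level Token should start with xapp-")
    return problems


def missing_scopes(granted):
    """Return the required bot scopes that are not in the granted scopes."""
    return sorted(REQUIRED_BOT_SCOPES - set(granted))


# Placeholder values for illustration only, not real credentials.
print(token_problems("xoxb-example", "xapp-example"))
print(missing_scopes(["chat:write", "im:history"]))
```

A common mistake is swapping the two tokens. Because they have different prefixes, a check like this catches the mix-up before publishing.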